In a multi-main setup, we have the following issue. The user's client connects to main A and runs a test webhook, so main A starts listening for a webhook call. A third-party service sends a request to the test webhook URL. The load balancer forwards the request to main B, which is not listening for this webhook call, so the webhook call goes unhandled.

To start addressing this, cache test webhook registrations, using Redis for queue mode and in-memory for regular mode. When the third-party service sends a request to the test webhook URL, the load balancer forwards the request to main B, which fetches test webhooks from the cache and, if it finds a match, executes the test webhook.

This should be transparent: test webhook behavior should remain the same as before.

Notes:

- Test webhook timeouts are not cached. A timeout is only relevant to the process it was created in, so another process that retrieves a "foreign" timeout from Redis will be unable to act on it. A timeout also has circular references, so `cache-manager-ioredis-yet` is unable to serialize it.
- In a single-main scenario, the timeout remains in the single process and is cleared on test webhook expiration, successful execution, and manual cancellation, all as usual.
- In a multi-main scenario, the process that received the webhook call will need to send a message to the process that created the webhook, directing this originating process to clear the timeout. This will likely be implemented via execution lifecycle hooks and Redis channel messages checking session ID. This implementation is out of scope for this PR and will come next.
- Additional data in test webhooks is not cached. From what I can tell, additional data is not needed for test webhooks to be executed. Additional data also has circular references, so `cache-manager-ioredis-yet` is unable to serialize it.

Follow-up to: #8155
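As a rough illustration of the caching idea described above, the following is a minimal sketch, not the code in this PR. All names (`TestWebhookRegistration`, `RegistrationStore`, `InMemoryStore`, `TestWebhookCache`, the `test-webhook:` key prefix) are hypothetical. It assumes a registration can be looked up by HTTP method and path, and that the store interface could be backed by Redis in queue mode or by a plain map in regular mode. The timeout and additional data are stripped before serialization, since neither is cached.

```typescript
// Hypothetical shape of a test webhook registration (illustrative only).
interface TestWebhookRegistration {
  sessionId?: string;
  workflowId: string;
  method: string;
  path: string;
  timeout?: unknown; // process-local timer handle; may hold circular references
  additionalData?: unknown; // may hold circular references
}

// What is actually written to the cache: everything except the two fields above.
type CacheableRegistration = Omit<TestWebhookRegistration, 'timeout' | 'additionalData'>;

// Assumed minimal store interface; a Redis-backed variant would implement the same methods.
interface RegistrationStore {
  set(key: string, value: string): Promise<void>;
  get(key: string): Promise<string | undefined>;
  delete(key: string): Promise<void>;
}

// In-memory store, standing in for regular (non-queue) mode.
class InMemoryStore implements RegistrationStore {
  private readonly entries = new Map<string, string>();

  async set(key: string, value: string): Promise<void> {
    this.entries.set(key, value);
  }

  async get(key: string): Promise<string | undefined> {
    return this.entries.get(key);
  }

  async delete(key: string): Promise<void> {
    this.entries.delete(key);
  }
}

// Hypothetical key scheme: one entry per method and path.
function toCacheKey(method: string, path: string): string {
  return `test-webhook:${method}:${path}`;
}

// Drop the fields that are process-local or not serializable.
function toCacheable(registration: TestWebhookRegistration): CacheableRegistration {
  const { timeout: _timeout, additionalData: _additionalData, ...rest } = registration;
  return rest;
}

class TestWebhookCache {
  constructor(private readonly store: RegistrationStore) {}

  // Called by the main that starts listening (main A in the description).
  async register(registration: TestWebhookRegistration): Promise<void> {
    const key = toCacheKey(registration.method, registration.path);
    await this.store.set(key, JSON.stringify(toCacheable(registration)));
  }

  // Called by whichever main receives the webhook call (possibly main B).
  async find(method: string, path: string): Promise<CacheableRegistration | undefined> {
    const raw = await this.store.get(toCacheKey(method, path));
    return raw === undefined ? undefined : (JSON.parse(raw) as CacheableRegistration);
  }

  async deregister(method: string, path: string): Promise<void> {
    await this.store.delete(toCacheKey(method, path));
  }
}
```

With a shared store, any main holding a `TestWebhookCache` can call `find` for an incoming request, regardless of which main called `register`. Clearing the originating process's timeout is a separate concern and is not covered by this sketch.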

n8n - Workflow automation tool

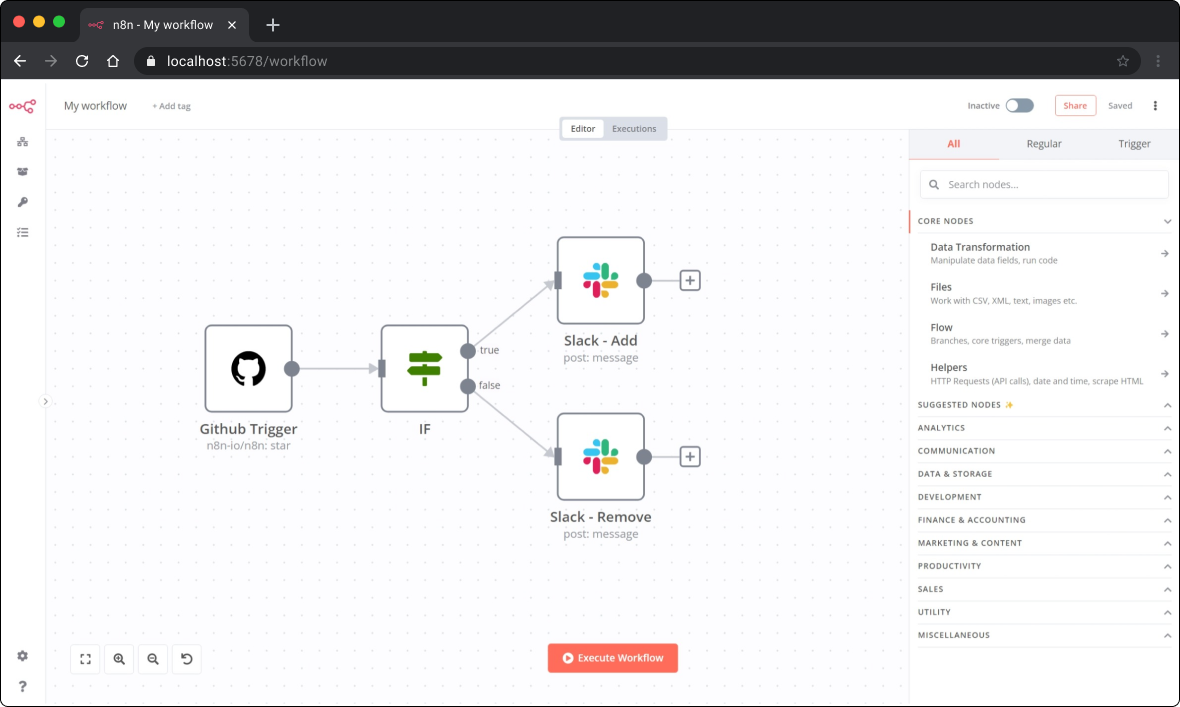

n8n is an extendable workflow automation tool. With a fair-code distribution model, n8n will always have visible source code, be available to self-host, and allow you to add your own custom functions, logic and apps. n8n's node-based approach makes it highly versatile, enabling you to connect anything to everything.

Demo

📺 A short video (< 5 min) that goes over key concepts of creating workflows in n8n.

Available integrations

n8n has 200+ different nodes to automate workflows. The list can be found on: https://n8n.io/integrations

Documentation

The official n8n documentation can be found on our documentation website

Additional information and example workflows on the n8n.io website

The release notes can be found here and the list of breaking changes here.

Usage

- 📚 Learn how to use it from the command line

- 🐳 Learn how to run n8n in Docker

Start

You can try n8n without installing it using npx. You must have Node.js installed. From the terminal, run:

npx n8n

This command will download everything that is needed to start n8n. You can then access n8n and start building workflows by opening http://localhost:5678.

n8n cloud

Sign up for an n8n cloud account.

While n8n cloud and n8n are the same in terms of features, n8n cloud provides certain conveniences such as:

- Not having to set up and maintain your n8n instance

- Managed OAuth for authentication

- Easily upgrading to the newer n8n versions

Build with LangChain and AI in n8n (beta)

With n8n's LangChain nodes you can build AI-powered functionality within your workflows. The LangChain nodes are configurable, meaning you can choose your preferred agent, LLM, memory, and so on. Alongside the LangChain nodes, you can connect any n8n node as normal: this means you can integrate your LangChain logic with other data sources and services.

Learn more in the documentation.

Support

If you have problems or questions, go to our forum and we will try to help you as soon as possible.

Jobs

If you are interested in working for n8n and helping shape the future of the project, check out our job posts.

What does n8n mean and how do you pronounce it?

Short answer: It means "nodemation" and it is pronounced as n-eight-n.

Long answer: "I get that question quite often (more often than I expected) so I decided it is probably best to answer it here. While looking for a good name for the project with a free domain I realized very quickly that all the good ones I could think of were already taken. So, in the end, I chose nodemation. 'node-' in the sense that it uses a Node-View and that it uses Node.js and '-mation' for 'automation' which is what the project is supposed to help with. However, I did not like how long the name was and I could not imagine writing something that long every time in the CLI. That is when I then ended up on 'n8n'." - Jan Oberhauser, Founder and CEO, n8n.io

Development setup

Have you found a bug 🐛? Or maybe you have a nice feature ✨ to contribute? The CONTRIBUTING guide will help you get your development environment ready in minutes.

License

n8n is fair-code distributed under the Sustainable Use License and the n8n Enterprise License.

Proprietary licenses are available for enterprise customers. Get in touch.

Additional information about the license model can be found in the docs.