Mirror of https://github.com/prometheus/node_exporter.git (synced 2025-08-20 18:33:52 -07:00)

Add basic authentication (#1683)

* Add basic authentication

Signed-off-by: Julien Pivotto <roidelapluie@inuits.eu>

Parent: b42819b69d
Commit: 202ecf9c9d

@@ -2,6 +2,7 @@
 
 * [CHANGE]
 * [FEATURE]
+* [FEATURE] Add basic authentication #1673
 * [ENHANCEMENT] Add model_name and stepping to node_cpu_info metric #1617
 * [ENHANCEMENT] Add metrics for IO errors and retires on Darwin. #1636
 * [BUGFIX] collector/systemd: use regexp to extract systemd version #1647

go.mod (1 line added)
@@ -23,6 +23,7 @@ require (
 	github.com/soundcloud/go-runit v0.0.0-20150630195641-06ad41a06c4a
 	go.uber.org/atomic v1.5.1 // indirect
 	go.uber.org/multierr v1.4.0 // indirect
+	golang.org/x/crypto v0.0.0-20191011191535-87dc89f01550
 	golang.org/x/lint v0.0.0-20200130185559-910be7a94367 // indirect
 	golang.org/x/sys v0.0.0-20200217220822-9197077df867
 	golang.org/x/tools v0.0.0-20200216192241-b320d3a0f5a2 // indirect

go.sum (2 lines added)
@@ -208,6 +208,7 @@ github.com/modern-go/concurrent v0.0.0-20180228061459-e0a39a4cb421/go.mod h1:6dJ
 github.com/modern-go/concurrent v0.0.0-20180306012644-bacd9c7ef1dd/go.mod h1:6dJC0mAP4ikYIbvyc7fijjWJddQyLn8Ig3JB5CqoB9Q=
 github.com/modern-go/reflect2 v0.0.0-20180701023420-4b7aa43c6742/go.mod h1:bx2lNnkwVCuqBIxFjflWJWanXIb3RllmbCylyMrvgv0=
 github.com/modern-go/reflect2 v1.0.1/go.mod h1:bx2lNnkwVCuqBIxFjflWJWanXIb3RllmbCylyMrvgv0=
+github.com/mwitkow/go-conntrack v0.0.0-20161129095857-cc309e4a2223 h1:F9x/1yl3T2AeKLr2AMdilSD8+f9bvMnNN8VS5iDtovc=
 github.com/mwitkow/go-conntrack v0.0.0-20161129095857-cc309e4a2223/go.mod h1:qRWi+5nqEBWmkhHvq77mSJWrCKwh8bxhgT7d/eI7P4U=
 github.com/nats-io/jwt v0.3.0/go.mod h1:fRYCDE99xlTsqUzISS1Bi75UBJ6ljOJQOAAu5VglpSg=
 github.com/nats-io/jwt v0.3.2/go.mod h1:/euKqTS1ZD+zzjYrY7pseZrTtWQSjujC7xjPc8wL6eU=

@@ -338,6 +339,7 @@ golang.org/x/crypto v0.0.0-20181029021203-45a5f77698d3/go.mod h1:6SG95UA2DQfeDnf
 golang.org/x/crypto v0.0.0-20190308221718-c2843e01d9a2/go.mod h1:djNgcEr1/C05ACkg1iLfiJU5Ep61QUkGW8qpdssI0+w=
 golang.org/x/crypto v0.0.0-20190510104115-cbcb75029529/go.mod h1:yigFU9vqHzYiE8UmvKecakEJjdnWj3jj499lnFckfCI=
 golang.org/x/crypto v0.0.0-20190701094942-4def268fd1a4/go.mod h1:yigFU9vqHzYiE8UmvKecakEJjdnWj3jj499lnFckfCI=
+golang.org/x/crypto v0.0.0-20191011191535-87dc89f01550 h1:ObdrDkeb4kJdCP557AjRjq69pTHfNouLtWZG7j9rPN8=
 golang.org/x/crypto v0.0.0-20191011191535-87dc89f01550/go.mod h1:yigFU9vqHzYiE8UmvKecakEJjdnWj3jj499lnFckfCI=
 golang.org/x/exp v0.0.0-20190121172915-509febef88a4/go.mod h1:CJ0aWSM057203Lf6IL+f9T1iT9GByDxfZKAQTCR3kQA=
 golang.org/x/lint v0.0.0-20181026193005-c67002cb31c3/go.mod h1:UVdnD1Gm6xHRNCYTkRU2/jEulfH38KcIWyp/GAMgvoE=

@@ -25,4 +25,27 @@ tls_config:
 
   # CA certificate for client certificate authentication to the server
   [ client_ca_file: <filename> ]
+
+# List of usernames and hashed passwords that have full access to the web
+# server via basic authentication. If empty, no basic authentication is
+# required. Passwords are hashed with bcrypt.
+basic_auth_users:
+  [ <username>: <password> ... ]
 ```
+
+## About bcrypt
+
+There are several tools out there to generate bcrypt passwords, e.g.
+[htpasswd](https://httpd.apache.org/docs/2.4/programs/htpasswd.html):
+
+`htpasswd -nBC 10 "" | tr -d ':\n'`
+
+That command will prompt you for a password and output the hashed password,
+which will look something like:
+
+`$2y$10$X0h1gDsPszWURQaxFh.zoubFi6DXncSjhoQNJgRrnGs7EsimhC7zG`
+
+The cost (10 in the example) influences the time it takes for computing the
+hash. A higher cost will end up slowing down the authentication process.
+Depending on the machine, a cost of 10 takes about 70ms, whereas a cost of
+18 can take up to a few seconds. That hash will be computed on every
+password-protected request.
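The cost discussion above rests on two properties of bcrypt hashes: the cost is encoded in the hash itself (the example hash starts with $2y$10$, so its cost is 10), and bcrypt performs 2^cost key-expansion rounds, so each increment doubles the work. The Go sketch below illustrates both with the standard library only. bcryptCost and relativeWork are hypothetical helpers, not part of this commit, which calls bcrypt.Cost from golang.org/x/crypto/bcrypt in validateUsers instead. The 59-byte minimum length is an assumption meant to mirror that package's check; it is what makes a plain value such as the doe password in the invalid test file fail validation.

```go
package main

import (
	"errors"
	"fmt"
	"strconv"
	"strings"
)

// minHashLen is assumed to match the shortest encoding golang.org/x/crypto/bcrypt
// accepts; a typical "$2y$NN$" hash such as the README example is 60 bytes long.
const minHashLen = 59

// bcryptCost is a hypothetical helper that reads the cost field from a
// modular-crypt bcrypt string of the form "$2y$<cost>$<salt+digest>".
func bcryptCost(hash string) (int, error) {
	if len(hash) < minHashLen {
		return 0, errors.New("hash too short to be a bcrypt hash")
	}
	// SplitN keeps the salt+digest intact as the fourth element.
	parts := strings.SplitN(hash, "$", 4)
	if len(parts) != 4 || parts[0] != "" || !strings.HasPrefix(parts[1], "2") {
		return 0, errors.New("not a bcrypt hash")
	}
	cost, err := strconv.Atoi(parts[2])
	if err != nil || cost < 4 || cost > 31 {
		return 0, fmt.Errorf("invalid bcrypt cost %q", parts[2])
	}
	return cost, nil
}

// relativeWork reports how many times more key-expansion rounds cost b
// performs than cost a, since bcrypt runs 2^cost rounds.
func relativeWork(a, b int) int {
	return 1 << uint(b-a)
}

func main() {
	for _, h := range []string{
		"$2y$10$X0h1gDsPszWURQaxFh.zoubFi6DXncSjhoQNJgRrnGs7EsimhC7zG", // README example
		"$2y$18$4VeFDzXIoPHKnKTU3O3GH.N.vZu06CVqczYZ8WvfzrddFU6tGqjR.", // bob in the users testdata
		"doe", // the plain-text value from tls_config_auth_user_list_invalid.bad.yml
	} {
		cost, err := bcryptCost(h)
		fmt.Println(cost, err)
	}
	fmt.Println(relativeWork(10, 18))
}
```

For the two costs the README compares, cost 18 means 2^8 = 256 times the rounds of cost 10, and that price is paid again on every password-protected request.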

https/testdata/tls_config_auth_user_list_invalid.bad.yml (new file, 5 lines)
@@ -0,0 +1,5 @@
+tls_config :
+  cert_file : "testdata/server.crt"
+  key_file : "testdata/server.key"
+basic_auth_users:
+  john: doe

https/testdata/tls_config_junk_key.yml (new file, 2 lines)
@@ -0,0 +1,2 @@
+tls_config :
+  cert_filse: "testdata/server.crt"

@@ -1,3 +1,4 @@
 tls_config :
   cert_file : ""
-  key_file : ""
+  key_file : ""
+  client_auth_type: "x"

https/testdata/tls_config_users.good.yml (new file, 8 lines)
@@ -0,0 +1,8 @@
+tls_config :
+  cert_file : "testdata/server.crt"
+  key_file : "testdata/server.key"
+basic_auth_users:
+  alice: $2y$12$1DpfPeqF9HzHJt.EWswy1exHluGfbhnn3yXhR7Xes6m3WJqFg0Wby
+  bob: $2y$18$4VeFDzXIoPHKnKTU3O3GH.N.vZu06CVqczYZ8WvfzrddFU6tGqjR.
+  carol: $2y$10$qRTBuFoULoYNA7AQ/F3ck.trZBPyjV64.oA4ZsSBCIWvXuvQlQTuu
+  dave: $2y$10$2UXri9cIDdgeKjBo4Rlpx.U3ZLDV8X1IxKmsfOvhcM5oXQt/mLmXq

https/testdata/tls_config_users_noTLS.good.yml (new file, 5 lines)
@@ -0,0 +1,5 @@
+basic_auth_users:
+  alice: $2y$12$1DpfPeqF9HzHJt.EWswy1exHluGfbhnn3yXhR7Xes6m3WJqFg0Wby
+  bob: $2y$18$4VeFDzXIoPHKnKTU3O3GH.N.vZu06CVqczYZ8WvfzrddFU6tGqjR.
+  carol: $2y$10$qRTBuFoULoYNA7AQ/F3ck.trZBPyjV64.oA4ZsSBCIWvXuvQlQTuu
+  dave: $2y$10$2UXri9cIDdgeKjBo4Rlpx.U3ZLDV8X1IxKmsfOvhcM5oXQt/mLmXq

@@ -20,12 +20,20 @@ import (
 	"io/ioutil"
 	"net/http"
 
+	"github.com/go-kit/kit/log"
+	"github.com/go-kit/kit/log/level"
 	"github.com/pkg/errors"
+	config_util "github.com/prometheus/common/config"
 	"gopkg.in/yaml.v2"
 )
 
+var (
+	errNoTLSConfig = errors.New("TLS config is not present")
+)
+
 type Config struct {
-	TLSConfig TLSStruct `yaml:"tls_config"`
+	TLSConfig TLSStruct `yaml:"tls_config"`
+	Users map[string]config_util.Secret `yaml:"basic_auth_users"`
 }
 
 type TLSStruct struct {

@@ -35,13 +43,18 @@ type TLSStruct struct {
 	ClientCAs string `yaml:"client_ca_file"`
 }
 
-func getTLSConfig(configPath string) (*tls.Config, error) {
+func getConfig(configPath string) (*Config, error) {
 	content, err := ioutil.ReadFile(configPath)
 	if err != nil {
 		return nil, err
 	}
 	c := &Config{}
-	err = yaml.Unmarshal(content, c)
+	err = yaml.UnmarshalStrict(content, c)
+	return c, err
+}
+
+func getTLSConfig(configPath string) (*tls.Config, error) {
+	c, err := getConfig(configPath)
 	if err != nil {
 		return nil, err
 	}

@@ -50,15 +63,19 @@ func getTLSConfig(configPath string) (*tls.Config, error) {
 
 // ConfigToTLSConfig generates the golang tls.Config from the TLSStruct config.
 func ConfigToTLSConfig(c *TLSStruct) (*tls.Config, error) {
+	if c.TLSCertPath == "" && c.TLSKeyPath == "" && c.ClientAuth == "" && c.ClientCAs == "" {
+		return nil, errNoTLSConfig
+	}
+
+	if c.TLSCertPath == "" {
+		return nil, errors.New("missing cert_file")
+	}
+	if c.TLSKeyPath == "" {
+		return nil, errors.New("missing key_file")
+	}
 	cfg := &tls.Config{
 		MinVersion: tls.VersionTLS12,
 	}
-	if len(c.TLSCertPath) == 0 {
-		return nil, errors.New("missing TLSCertPath")
-	}
-	if len(c.TLSKeyPath) == 0 {
-		return nil, errors.New("missing TLSKeyPath")
-	}
 	loadCert := func() (*tls.Certificate, error) {
 		cert, err := tls.LoadX509KeyPair(c.TLSCertPath, c.TLSKeyPath)
 		if err != nil {

@@ -74,7 +91,7 @@ func ConfigToTLSConfig(c *TLSStruct) (*tls.Config, error) {
 		return loadCert()
 	}
 
-	if len(c.ClientCAs) > 0 {
+	if c.ClientCAs != "" {
 		clientCAPool := x509.NewCertPool()
 		clientCAFile, err := ioutil.ReadFile(c.ClientCAs)
 		if err != nil {

@@ -83,40 +100,67 @@ func ConfigToTLSConfig(c *TLSStruct) (*tls.Config, error) {
 		clientCAPool.AppendCertsFromPEM(clientCAFile)
 		cfg.ClientCAs = clientCAPool
 	}
-	if len(c.ClientAuth) > 0 {
-		switch s := (c.ClientAuth); s {
-		case "NoClientCert":
-			cfg.ClientAuth = tls.NoClientCert
-		case "RequestClientCert":
-			cfg.ClientAuth = tls.RequestClientCert
-		case "RequireClientCert":
-			cfg.ClientAuth = tls.RequireAnyClientCert
-		case "VerifyClientCertIfGiven":
-			cfg.ClientAuth = tls.VerifyClientCertIfGiven
-		case "RequireAndVerifyClientCert":
-			cfg.ClientAuth = tls.RequireAndVerifyClientCert
-		case "":
-			cfg.ClientAuth = tls.NoClientCert
-		default:
-			return nil, errors.New("Invalid ClientAuth: " + s)
-		}
+
+	switch c.ClientAuth {
+	case "RequestClientCert":
+		cfg.ClientAuth = tls.RequestClientCert
+	case "RequireClientCert":
+		cfg.ClientAuth = tls.RequireAnyClientCert
+	case "VerifyClientCertIfGiven":
+		cfg.ClientAuth = tls.VerifyClientCertIfGiven
+	case "RequireAndVerifyClientCert":
+		cfg.ClientAuth = tls.RequireAndVerifyClientCert
+	case "", "NoClientCert":
+		cfg.ClientAuth = tls.NoClientCert
+	default:
+		return nil, errors.New("Invalid ClientAuth: " + c.ClientAuth)
 	}
-	if len(c.ClientCAs) > 0 && cfg.ClientAuth == tls.NoClientCert {
+
+	if c.ClientCAs != "" && cfg.ClientAuth == tls.NoClientCert {
 		return nil, errors.New("Client CA's have been configured without a Client Auth Policy")
 	}
 
 	return cfg, nil
 }
 
 // Listen starts the server on the given address. If tlsConfigPath isn't empty the server connection will be started using TLS.
-func Listen(server *http.Server, tlsConfigPath string) error {
-	if (tlsConfigPath) == "" {
+func Listen(server *http.Server, tlsConfigPath string, logger log.Logger) error {
+	if tlsConfigPath == "" {
+		level.Info(logger).Log("msg", "TLS is disabled and it cannot be enabled on the fly.")
 		return server.ListenAndServe()
 	}
-	var err error
-	server.TLSConfig, err = getTLSConfig(tlsConfigPath)
-	if err != nil {
+
+	if err := validateUsers(tlsConfigPath); err != nil {
 		return err
 	}
+
+	// Setup basic authentication.
+	var handler http.Handler = http.DefaultServeMux
+	if server.Handler != nil {
+		handler = server.Handler
+	}
+	server.Handler = &userAuthRoundtrip{
+		tlsConfigPath: tlsConfigPath,
+		logger: logger,
+		handler: handler,
+	}
+
+	config, err := getTLSConfig(tlsConfigPath)
+	switch err {
+	case nil:
+		// Valid TLS config.
+		level.Info(logger).Log("msg", "TLS is enabled and it cannot be disabled on the fly.")
+	case errNoTLSConfig:
+		// No TLS config, back to plain HTTP.
+		level.Info(logger).Log("msg", "TLS is disabled and it cannot be enabled on the fly.")
+		return server.ListenAndServe()
+	default:
+		// Invalid TLS config.
+		return err
+	}
+
+	server.TLSConfig = config
 
 	// Set the GetConfigForClient method of the HTTPS server so that the config
 	// and certs are reloaded on new connections.
 	server.TLSConfig.GetConfigForClient = func(*tls.ClientHelloInfo) (*tls.Config, error) {

@@ -28,7 +28,8 @@ import (
 )
 
 var (
-	port = getPort()
+	port = getPort()
+	testlogger = &testLogger{}
 
 	ErrorMap = map[string]*regexp.Regexp{
 		"HTTP Response to HTTPS": regexp.MustCompile(`server gave HTTP response to HTTPS client`),

@@ -38,12 +39,21 @@ var (
 		"Invalid ClientAuth": regexp.MustCompile(`invalid ClientAuth`),
 		"TLS handshake": regexp.MustCompile(`tls`),
 		"HTTP Request to HTTPS server": regexp.MustCompile(`HTTP`),
-		"Invalid CertPath": regexp.MustCompile(`missing TLSCertPath`),
-		"Invalid KeyPath": regexp.MustCompile(`missing TLSKeyPath`),
+		"Invalid CertPath": regexp.MustCompile(`missing cert_file`),
+		"Invalid KeyPath": regexp.MustCompile(`missing key_file`),
 		"ClientCA set without policy": regexp.MustCompile(`Client CA's have been configured without a Client Auth Policy`),
+		"Bad password": regexp.MustCompile(`hashedSecret too short to be a bcrypted password`),
+		"Unauthorized": regexp.MustCompile(`Unauthorized`),
+		"Forbidden": regexp.MustCompile(`Forbidden`),
 	}
 )
 
+type testLogger struct{}
+
+func (t *testLogger) Log(keyvals ...interface{}) error {
+	return nil
+}
+
 func getPort() string {
 	listener, err := net.Listen("tcp", ":0")
 	if err != nil {
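The test helper getPort, whose body is cut off in this view, starts by calling net.Listen("tcp", ":0"), which lets the operating system choose any free port. A minimal sketch of that technique follows. freePort is a hypothetical name, and returning the port as ":NNNN" is an assumption based on how the tests build URLs ("https://localhost" + port).

```go
package main

import (
	"fmt"
	"net"
)

// freePort is a hypothetical helper: it binds to port 0 so the kernel picks
// an unused port, then releases it and returns it in ":NNNN" form.
// Another process could grab the port before the test server binds it again.
func freePort() (string, error) {
	listener, err := net.Listen("tcp", ":0")
	if err != nil {
		return "", err
	}
	defer listener.Close()
	return fmt.Sprintf(":%d", listener.Addr().(*net.TCPAddr).Port), nil
}

func main() {
	port, err := freePort()
	if err != nil {
		panic(err)
	}
	fmt.Println("https://localhost" + port)
}
```

Closing the listener before the server reopens the port leaves a small race window; test suites generally accept it in exchange for not hard-coding a port.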

@@ -61,6 +71,8 @@ type TestInputs struct {
 	YAMLConfigPath string
 	ExpectedError *regexp.Regexp
 	UseTLSClient bool
+	Username string
+	Password string
 }
 
 func TestYAMLFiles(t *testing.T) {

@@ -73,13 +85,18 @@ func TestYAMLFiles(t *testing.T) {
 		{
 			Name: `empty config yml`,
 			YAMLConfigPath: "testdata/tls_config_empty.yml",
-			ExpectedError: ErrorMap["Invalid CertPath"],
+			ExpectedError: nil,
 		},
 		{
 			Name: `invalid config yml (invalid structure)`,
 			YAMLConfigPath: "testdata/tls_config_junk.yml",
 			ExpectedError: ErrorMap["YAML error"],
 		},
 		{
+			Name: `invalid config yml (invalid key)`,
+			YAMLConfigPath: "testdata/tls_config_junk_key.yml",
+			ExpectedError: ErrorMap["YAML error"],
+		},
+		{
 			Name: `invalid config yml (cert path empty)`,
 			YAMLConfigPath: "testdata/tls_config_noAuth_certPath_empty.bad.yml",

@@ -120,6 +137,11 @@ func TestYAMLFiles(t *testing.T) {
 			YAMLConfigPath: "testdata/tls_config_auth_clientCAs_invalid.bad.yml",
 			ExpectedError: ErrorMap["No such file"],
 		},
+		{
+			Name: `invalid config yml (invalid user list)`,
+			YAMLConfigPath: "testdata/tls_config_auth_user_list_invalid.bad.yml",
+			ExpectedError: ErrorMap["Bad password"],
+		},
 	}
 	for _, testInputs := range testTables {
 		t.Run(testInputs.Name, testInputs.Test)

@@ -189,7 +211,7 @@ func TestConfigReloading(t *testing.T) {
 				recordConnectionError(errors.New("Panic starting server"))
 			}
 		}()
-		err := Listen(server, badYAMLPath)
+		err := Listen(server, badYAMLPath, testlogger)
 		recordConnectionError(err)
 	}()
 

@@ -266,21 +288,28 @@ func (test *TestInputs) Test(t *testing.T) {
 				recordConnectionError(errors.New("Panic starting server"))
 			}
 		}()
-		err := Listen(server, test.YAMLConfigPath)
+		err := Listen(server, test.YAMLConfigPath, testlogger)
 		recordConnectionError(err)
 	}()
 
-	var ClientConnection func() (*http.Response, error)
-	if test.UseTLSClient {
-		ClientConnection = func() (*http.Response, error) {
-			client := getTLSClient()
-			return client.Get("https://localhost" + port)
+	ClientConnection := func() (*http.Response, error) {
+		var client *http.Client
+		var proto string
+		if test.UseTLSClient {
+			client = getTLSClient()
+			proto = "https"
+		} else {
+			client = http.DefaultClient
+			proto = "http"
 		}
-	} else {
-		ClientConnection = func() (*http.Response, error) {
-			client := http.DefaultClient
-			return client.Get("http://localhost" + port)
+		req, err := http.NewRequest("GET", proto+"://localhost"+port, nil)
+		if err != nil {
+			t.Error(err)
+		}
+		if test.Username != "" {
+			req.SetBasicAuth(test.Username, test.Password)
+		}
+		return client.Do(req)
 	}
 	go func() {
 		time.Sleep(250 * time.Millisecond)

@@ -360,3 +389,61 @@ func swapFileContents(file1, file2 string) error {
 	}
 	return nil
 }
+
+func TestUsers(t *testing.T) {
+	testTables := []*TestInputs{
+		{
+			Name: `without basic auth`,
+			YAMLConfigPath: "testdata/tls_config_users_noTLS.good.yml",
+			ExpectedError: ErrorMap["Unauthorized"],
+		},
+		{
+			Name: `with correct basic auth`,
+			YAMLConfigPath: "testdata/tls_config_users_noTLS.good.yml",
+			Username: "dave",
+			Password: "dave123",
+			ExpectedError: nil,
+		},
+		{
+			Name: `without basic auth and TLS`,
+			YAMLConfigPath: "testdata/tls_config_users.good.yml",
+			UseTLSClient: true,
+			ExpectedError: ErrorMap["Unauthorized"],
+		},
+		{
+			Name: `with correct basic auth and TLS`,
+			YAMLConfigPath: "testdata/tls_config_users.good.yml",
+			UseTLSClient: true,
+			Username: "dave",
+			Password: "dave123",
+			ExpectedError: nil,
+		},
+		{
+			Name: `with another correct basic auth and TLS`,
+			YAMLConfigPath: "testdata/tls_config_users.good.yml",
+			UseTLSClient: true,
+			Username: "carol",
+			Password: "carol123",
+			ExpectedError: nil,
+		},
+		{
+			Name: `with bad password and TLS`,
+			YAMLConfigPath: "testdata/tls_config_users.good.yml",
+			UseTLSClient: true,
+			Username: "dave",
+			Password: "bad",
+			ExpectedError: ErrorMap["Forbidden"],
+		},
+		{
+			Name: `with bad username and TLS`,
+			YAMLConfigPath: "testdata/tls_config_users.good.yml",
+			UseTLSClient: true,
+			Username: "nonexistent",
+			Password: "nonexistent",
+			ExpectedError: ErrorMap["Forbidden"],
+		},
+	}
+	for _, testInputs := range testTables {
+		t.Run(testInputs.Name, testInputs.Test)
+	}
+}

https/users.go (new file, 73 lines)
@@ -0,0 +1,73 @@
+// Copyright 2020 The Prometheus Authors
+// Licensed under the Apache License, Version 2.0 (the "License");
+// you may not use this file except in compliance with the License.
+// You may obtain a copy of the License at
+//
+// http://www.apache.org/licenses/LICENSE-2.0
+//
+// Unless required by applicable law or agreed to in writing, software
+// distributed under the License is distributed on an "AS IS" BASIS,
+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+// See the License for the specific language governing permissions and
+// limitations under the License.
+
+package https
+
+import (
+	"net/http"
+
+	"github.com/go-kit/kit/log"
+	"golang.org/x/crypto/bcrypt"
+)
+
+func validateUsers(configPath string) error {
+	c, err := getConfig(configPath)
+	if err != nil {
+		return err
+	}
+
+	for _, p := range c.Users {
+		_, err = bcrypt.Cost([]byte(p))
+		if err != nil {
+			return err
+		}
+	}
+
+	return nil
+}
+
+type userAuthRoundtrip struct {
+	tlsConfigPath string
+	handler http.Handler
+	logger log.Logger
+}
+
+func (u *userAuthRoundtrip) ServeHTTP(w http.ResponseWriter, r *http.Request) {
+	c, err := getConfig(u.tlsConfigPath)
+	if err != nil {
+		u.logger.Log("msg", "Unable to parse configuration", "err", err)
+		http.Error(w, http.StatusText(http.StatusInternalServerError), http.StatusInternalServerError)
+		return
+	}
+
+	if len(c.Users) == 0 {
+		u.handler.ServeHTTP(w, r)
+		return
+	}
+
+	user, pass, ok := r.BasicAuth()
+	if !ok {
+		w.Header().Set("WWW-Authenticate", "Basic")
+		http.Error(w, http.StatusText(http.StatusUnauthorized), http.StatusUnauthorized)
+		return
+	}
+
+	if hashedPassword, ok := c.Users[user]; ok {
+		if err := bcrypt.CompareHashAndPassword([]byte(hashedPassword), []byte(pass)); err == nil {
+			u.handler.ServeHTTP(w, r)
+			return
+		}
+	}
+
+	http.Error(w, http.StatusText(http.StatusForbidden), http.StatusForbidden)
+}

@@ -15,13 +15,14 @@ package main
 
 import (
 	"fmt"
-	"github.com/prometheus/common/promlog"
-	"github.com/prometheus/common/promlog/flag"
 	"net/http"
 	_ "net/http/pprof"
 	"os"
 	"sort"
+
+	"github.com/prometheus/common/promlog"
+	"github.com/prometheus/common/promlog/flag"
 
 	"github.com/go-kit/kit/log"
 	"github.com/go-kit/kit/log/level"
 	"github.com/prometheus/client_golang/prometheus"

@@ -189,7 +190,7 @@ func main() {
 
 	level.Info(logger).Log("msg", "Listening on", "address", *listenAddress)
 	server := &http.Server{Addr: *listenAddress}
-	if err := https.Listen(server, *configFile); err != nil {
+	if err := https.Listen(server, *configFile, logger); err != nil {
 		level.Error(logger).Log("err", err)
 		os.Exit(1)
 	}

vendor/github.com/mwitkow/go-conntrack/.gitignore (new file, 163 lines)
@@ -0,0 +1,163 @@
+# Created by .ignore support plugin (hsz.mobi)
+### JetBrains template
+# Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio and Webstorm
+# Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839
+
+# User-specific stuff:
+.idea
+.idea/workspace.xml
+.idea/tasks.xml
+.idea/dictionaries
+.idea/vcs.xml
+.idea/jsLibraryMappings.xml
+
+# Sensitive or high-churn files:
+.idea/dataSources.ids
+.idea/dataSources.xml
+.idea/dataSources.local.xml
+.idea/sqlDataSources.xml
+.idea/dynamic.xml
+.idea/uiDesigner.xml
+
+# Gradle:
+.idea/gradle.xml
+.idea/libraries
+
+# Mongo Explorer plugin:
+.idea/mongoSettings.xml
+
+## File-based project format:
+*.iws
+
+## Plugin-specific files:
+
+# IntelliJ
+/out/
+
+# mpeltonen/sbt-idea plugin
+.idea_modules/
+
+# JIRA plugin
+atlassian-ide-plugin.xml
+
+# Crashlytics plugin (for Android Studio and IntelliJ)
+com_crashlytics_export_strings.xml
+crashlytics.properties
+crashlytics-build.properties
+fabric.properties
+### Go template
+# Compiled Object files, Static and Dynamic libs (Shared Objects)
+*.o
+*.a
+*.so
+
+# Folders
+_obj
+_test
+
+# Architecture specific extensions/prefixes
+*.[568vq]
+[568vq].out
+
+*.cgo1.go
+*.cgo2.c
+_cgo_defun.c
+_cgo_gotypes.go
+_cgo_export.*
+
+_testmain.go
+
+*.exe
+*.test
+*.prof
+### Python template
+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+env/
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+*.egg-info/
+.installed.cfg
+*.egg
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*,cover
+.hypothesis/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+target/
+
+# IPython Notebook
+.ipynb_checkpoints
+
+# pyenv
+.python-version
+
+# celery beat schedule file
+celerybeat-schedule
+
+# dotenv
+.env
+
+# virtualenv
+venv/
+ENV/
+
+# Spyder project settings
+.spyderproject
+
+# Rope project settings
+.ropeproject
+

vendor/github.com/mwitkow/go-conntrack/.travis.yml (new file, 13 lines)
@@ -0,0 +1,13 @@
+sudo: false
+language: go
+go:
+- 1.7
+
+install:
+- go get github.com/stretchr/testify
+- go get github.com/prometheus/client_golang/prometheus
+- go get golang.org/x/net/context
+- go get golang.org/x/net/trace
+
+script:
+- go test -v ./...

201

vendor/github.com/mwitkow/go-conntrack/LICENSE

generated

vendored

Normal file

201

vendor/github.com/mwitkow/go-conntrack/LICENSE

generated

vendored

Normal file

|

|

@ -0,0 +1,201 @@

|

|||

Apache License

|

||||

Version 2.0, January 2004

|

||||

http://www.apache.org/licenses/

|

||||

|

||||

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

||||

|

||||

1. Definitions.

|

||||

|

||||

"License" shall mean the terms and conditions for use, reproduction,

|

||||

and distribution as defined by Sections 1 through 9 of this document.

|

||||

|

||||

"Licensor" shall mean the copyright owner or entity authorized by

|

||||

the copyright owner that is granting the License.

|

||||

|

||||

"Legal Entity" shall mean the union of the acting entity and all

|

||||

other entities that control, are controlled by, or are under common

|

||||

control with that entity. For the purposes of this definition,

|

||||

"control" means (i) the power, direct or indirect, to cause the

|

||||

direction or management of such entity, whether by contract or

|

||||

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

||||

outstanding shares, or (iii) beneficial ownership of such entity.

|

||||

|

||||

"You" (or "Your") shall mean an individual or Legal Entity

|

||||

exercising permissions granted by this License.

|

||||

|

||||

"Source" form shall mean the preferred form for making modifications,

|

||||

including but not limited to software source code, documentation

|

||||

source, and configuration files.

|

||||

|

||||

"Object" form shall mean any form resulting from mechanical

|

||||

transformation or translation of a Source form, including but

|

||||

not limited to compiled object code, generated documentation,

|

||||

and conversions to other media types.

|

||||

|

||||

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "{}"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright {yyyy} {name of copyright owner}

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.

88

vendor/github.com/mwitkow/go-conntrack/README.md

generated

vendored

Normal file


@@ -0,0 +1,88 @@

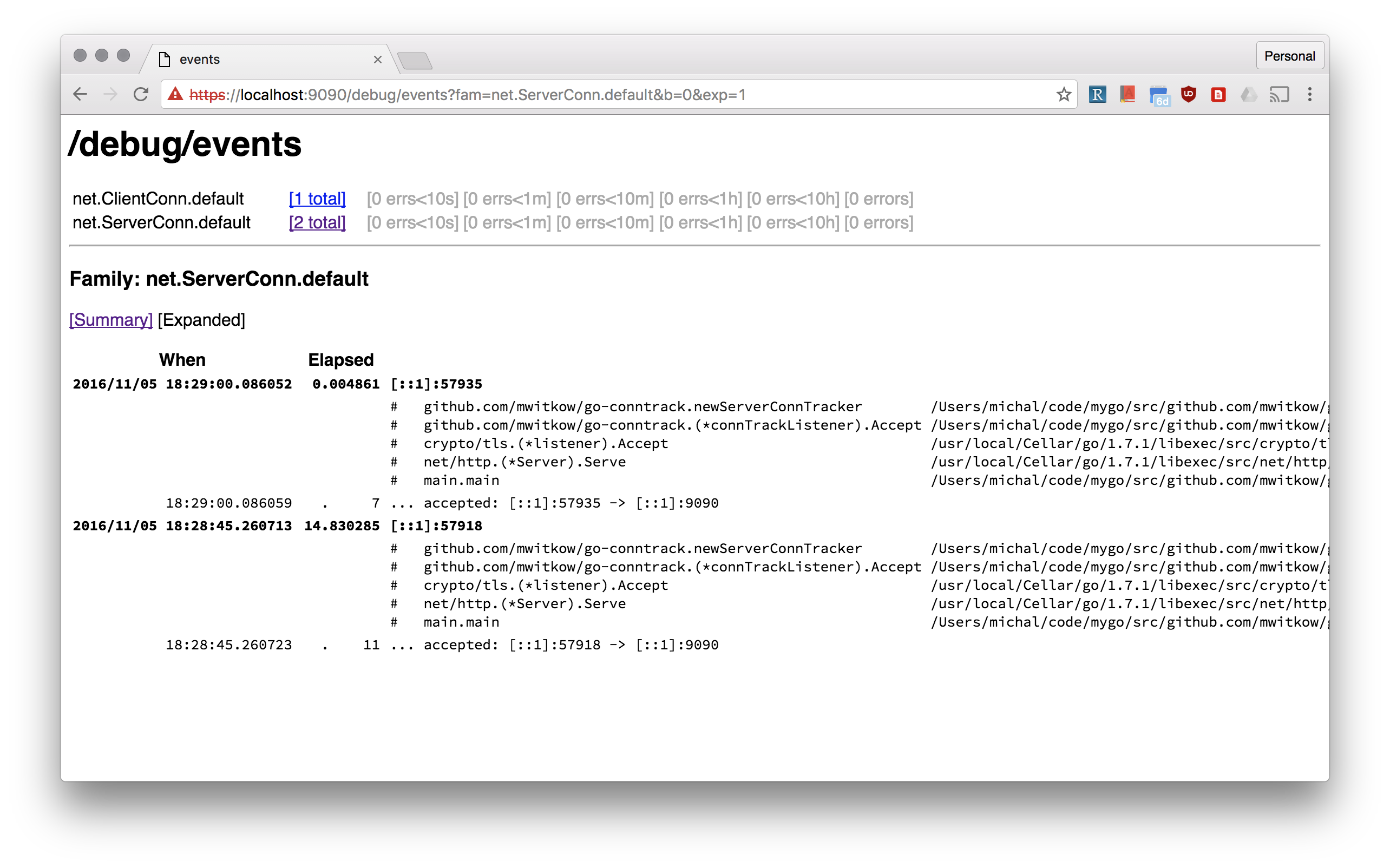

# Go tracing and monitoring (Prometheus) for `net.Conn`

|

||||

|

||||

[Travis CI build status](https://travis-ci.org/mwitkow/go-conntrack)
[Go Report Card](http://goreportcard.com/report/mwitkow/go-conntrack)
[GoDoc](https://godoc.org/github.com/mwitkow/go-conntrack)
[Apache 2.0 License](LICENSE)

[Prometheus](https://prometheus.io/) monitoring and [`x/net/trace`](https://godoc.org/golang.org/x/net/trace#EventLog) tracing wrappers for `net.Conn`, both inbound (`net.Listener`) and outbound (`net.Dialer`).

## Why?

The Go standard library does a great job of doing "the right" things with your connections: `http.Transport` pools outbound ones, and `http.Server` sets good *Keep Alive* defaults.
However, it is still easy to get things wrong; see the excellent [*The complete guide to Go net/http timeouts*](https://blog.cloudflare.com/the-complete-guide-to-golang-net-http-timeouts/).

That's why you should be able to monitor (using Prometheus) how many inbound connections your Go frontend servers have, and how big the connection pools to your backends are. You should also be able to inspect your connections without `ssh` and `netstat`.

## How to use?

All of these examples can be found in [`example/server.go`](example/server.go):

### Conntrack Dialer for HTTP DefaultClient

Most often people use the default `http.DefaultClient`, which uses `http.DefaultTransport`. The easiest way to make sure all your outbound connections are monitored and traced is:

```go
http.DefaultTransport.(*http.Transport).DialContext = conntrack.NewDialContextFunc(
	conntrack.DialWithTracing(),
	conntrack.DialWithDialer(&net.Dialer{
		Timeout:   30 * time.Second,
		KeepAlive: 30 * time.Second,
	}),
)
```

#### Dialer Name

Tracked outbound connections are organised by *dialer name* (`default` unless set otherwise). The *dialer name* is used for monitoring (`dialer_name` label) and tracing (`net.ClientConn.<dialer_name>` family).

You can pass `conntrack.DialWithName()` to `NewDialContextFunc` to set the name for the dialer. Moreover, you can set the *dialer name* per invocation of the dialer by passing it in the `Context`. For example, using the [`ctxhttp`](https://godoc.org/golang.org/x/net/context/ctxhttp) lib:

```go
callCtx := conntrack.DialNameToContext(parentCtx, "google")
ctxhttp.Get(callCtx, http.DefaultClient, "https://www.google.com")
```

### Conntrack Listener for HTTP Server

Tracked inbound connections are organised by *listener name* (`default` unless set otherwise). The *listener name* is used for monitoring (`listener_name` label) and tracing (`net.ServerConn.<listener_name>` family). For example, a simple `http.Server` can be instrumented like this:

```go
listener, err := net.Listen("tcp", fmt.Sprintf(":%d", *port))
if err != nil {
	log.Fatalf("failed to listen: %v", err)
}
listener = conntrack.NewListener(listener,
	conntrack.TrackWithName("http"),
	conntrack.TrackWithTracing(),
	conntrack.TrackWithTcpKeepAlive(5*time.Minute))
httpServer.Serve(listener)
```

Note the `TrackWithTcpKeepAlive` option. The default `http.ListenAndServe` adds a TCP keep-alive wrapper to inbound TCP connections; `conntrack.NewListener` allows you to do the same without another layer of wrapping.

#### TLS server example

The standard library `http.ListenAndServeTLS` does a lot to bootstrap TLS connections, including supporting HTTP/2 negotiation. Unfortunately, that is hard to do if you want to provide your own `net.Listener`. That's why this repo comes with the `connhelpers` package, which takes care of configuring `tls.Config` for that use case. Here's an example of its use:

```go
listener, err := net.Listen("tcp", fmt.Sprintf(":%d", *port))
if err != nil {
	log.Fatalf("failed to listen: %v", err)
}
listener = conntrack.NewListener(listener,
	conntrack.TrackWithName("https"),
	conntrack.TrackWithTracing(),
	conntrack.TrackWithTcpKeepAlive(5*time.Minute))
tlsConfig, err := connhelpers.TlsConfigForServerCerts(*tlsCertFilePath, *tlsKeyFilePath)
if err != nil {
	log.Fatalf("failed to load TLS certificates: %v", err)
}
tlsConfig, err = connhelpers.TlsConfigWithHttp2Enabled(tlsConfig)
if err != nil {
	log.Fatalf("failed to enable HTTP/2: %v", err)
}
tlsListener := tls.NewListener(listener, tlsConfig)
httpServer.Serve(tlsListener)
```

# Status

This code is used by Improbable's HTTP frontending and proxying stack for debugging and monitoring of established user connections.

Additional tooling will be added if needed, and contributions are welcome.

# License

`go-conntrack` is released under the Apache 2.0 license. See the [LICENSE](LICENSE) file for details.

108

vendor/github.com/mwitkow/go-conntrack/dialer_reporter.go

generated

vendored

Normal file


@@ -0,0 +1,108 @@

// Copyright 2016 Michal Witkowski. All Rights Reserved.
// See LICENSE for licensing terms.

package conntrack

import (
	"context"
	"net"
	"os"
	"syscall"

	prom "github.com/prometheus/client_golang/prometheus"
)

type failureReason string

const (
	failedResolution  = "resolution"
	failedConnRefused = "refused"
	failedTimeout     = "timeout"
	failedUnknown     = "unknown"
)

var (
	dialerAttemptedTotal = prom.NewCounterVec(
		prom.CounterOpts{
			Namespace: "net",
			Subsystem: "conntrack",
			Name:      "dialer_conn_attempted_total",
			Help:      "Total number of connections attempted by the dialer of a given name.",
		}, []string{"dialer_name"})

	dialerConnEstablishedTotal = prom.NewCounterVec(
		prom.CounterOpts{
			Namespace: "net",
			Subsystem: "conntrack",
			Name:      "dialer_conn_established_total",
			Help:      "Total number of connections successfully established by the dialer of a given name.",
		}, []string{"dialer_name"})

	dialerConnFailedTotal = prom.NewCounterVec(
		prom.CounterOpts{
			Namespace: "net",
			Subsystem: "conntrack",
			Name:      "dialer_conn_failed_total",
			Help:      "Total number of connections failed to dial by the dialer of a given name.",
		}, []string{"dialer_name", "reason"})

	dialerConnClosedTotal = prom.NewCounterVec(
		prom.CounterOpts{
			Namespace: "net",
			Subsystem: "conntrack",
			Name:      "dialer_conn_closed_total",
			Help:      "Total number of connections closed which originated from the dialer of a given name.",
		}, []string{"dialer_name"})
)

func init() {
	prom.MustRegister(dialerAttemptedTotal)
	prom.MustRegister(dialerConnEstablishedTotal)
	prom.MustRegister(dialerConnFailedTotal)
	prom.MustRegister(dialerConnClosedTotal)
}

// PreRegisterDialerMetrics pre-populates Prometheus labels for the given dialer name, so the
// series are exported at zero before the first dial instead of appearing only after it.
func PreRegisterDialerMetrics(dialerName string) {
	dialerAttemptedTotal.WithLabelValues(dialerName)
	dialerConnEstablishedTotal.WithLabelValues(dialerName)
	for _, reason := range []failureReason{failedTimeout, failedResolution, failedConnRefused, failedUnknown} {
		dialerConnFailedTotal.WithLabelValues(dialerName, string(reason))
	}
	dialerConnClosedTotal.WithLabelValues(dialerName)
}

func reportDialerConnAttempt(dialerName string) {
	dialerAttemptedTotal.WithLabelValues(dialerName).Inc()
}

func reportDialerConnEstablished(dialerName string) {
	dialerConnEstablishedTotal.WithLabelValues(dialerName).Inc()
}

func reportDialerConnClosed(dialerName string) {
	dialerConnClosedTotal.WithLabelValues(dialerName).Inc()
}

// reportDialerConnFailed increments the failure counter under exactly one reason.
func reportDialerConnFailed(dialerName string, err error) {
	if netErr, ok := err.(*net.OpError); ok {
		switch nestErr := netErr.Err.(type) {
		case *net.DNSError:
			dialerConnFailedTotal.WithLabelValues(dialerName, string(failedResolution)).Inc()
			return
		case *os.SyscallError:
			if nestErr.Err == syscall.ECONNREFUSED {
				dialerConnFailedTotal.WithLabelValues(dialerName, string(failedConnRefused)).Inc()
				return
			}
			dialerConnFailedTotal.WithLabelValues(dialerName, string(failedUnknown)).Inc()
			return
		}
		if netErr.Timeout() {
			dialerConnFailedTotal.WithLabelValues(dialerName, string(failedTimeout)).Inc()
			return
		}
	} else if err == context.Canceled || err == context.DeadlineExceeded {
		dialerConnFailedTotal.WithLabelValues(dialerName, string(failedTimeout)).Inc()
		return
	}
	dialerConnFailedTotal.WithLabelValues(dialerName, string(failedUnknown)).Inc()
}

166

vendor/github.com/mwitkow/go-conntrack/dialer_wrapper.go

generated

vendored

Normal file


@@ -0,0 +1,166 @@

// Copyright 2016 Michal Witkowski. All Rights Reserved.
// See LICENSE for licensing terms.

package conntrack

import (
	"context"
	"fmt"
	"net"
	"sync"

	"golang.org/x/net/trace"
)

var (
	dialerNameKey = "conntrackDialerKey"
)

type dialerOpts struct {
	name                  string
	monitoring            bool
	tracing               bool
	parentDialContextFunc dialerContextFunc
}

type dialerOpt func(*dialerOpts)

type dialerContextFunc func(context.Context, string, string) (net.Conn, error)

// DialWithName sets the name of the dialer for tracking and monitoring.
// This is the name of the dialer (`default` if unset); for `NewDialContextFunc` it can be overridden
// per call from the Context using `DialNameToContext`.
func DialWithName(name string) dialerOpt {
	return func(opts *dialerOpts) {
		opts.name = name
	}
}

// DialWithoutMonitoring turns *off* Prometheus monitoring for this dialer.
func DialWithoutMonitoring() dialerOpt {
	return func(opts *dialerOpts) {
		opts.monitoring = false
	}
}

// DialWithTracing turns *on* the /debug/events tracing of the dial calls.
func DialWithTracing() dialerOpt {
	return func(opts *dialerOpts) {
		opts.tracing = true
	}
}

// DialWithDialer allows you to override the `net.Dialer` instance used to actually conduct the dials.
func DialWithDialer(parentDialer *net.Dialer) dialerOpt {
	return DialWithDialContextFunc(parentDialer.DialContext)
}

// DialWithDialContextFunc allows you to override the func that is used for the actual dialing. The default is `net.Dialer.DialContext`.
func DialWithDialContextFunc(parentDialerFunc dialerContextFunc) dialerOpt {
	return func(opts *dialerOpts) {
		opts.parentDialContextFunc = parentDialerFunc
	}
}

// DialNameFromContext returns the name of the dialer from the context of the DialContext func, if any.
func DialNameFromContext(ctx context.Context) string {
	val, ok := ctx.Value(dialerNameKey).(string)
	if !ok {
		return ""
	}
	return val
}

// DialNameToContext returns a context that will contain a dialer name override.
func DialNameToContext(ctx context.Context, dialerName string) context.Context {
	return context.WithValue(ctx, dialerNameKey, dialerName)
}

// NewDialContextFunc returns a `DialContext` function that tracks outbound connections.
// The signature is compatible with `http.Transport.DialContext` and is meant to be used there.
func NewDialContextFunc(optFuncs ...dialerOpt) func(context.Context, string, string) (net.Conn, error) {
	opts := &dialerOpts{name: defaultName, monitoring: true, parentDialContextFunc: (&net.Dialer{}).DialContext}
	for _, f := range optFuncs {
		f(opts)
	}
	if opts.monitoring {
		PreRegisterDialerMetrics(opts.name)
	}
	return func(ctx context.Context, network string, addr string) (net.Conn, error) {
		name := opts.name
		if ctxName := DialNameFromContext(ctx); ctxName != "" {
			name = ctxName
		}
		return dialClientConnTracker(ctx, network, addr, name, opts)
	}
}

// NewDialFunc returns a `Dial` function that tracks outbound connections.
// The signature is compatible with `http.Transport.Dial` and is meant to be used there for Go < 1.7.
func NewDialFunc(optFuncs ...dialerOpt) func(string, string) (net.Conn, error) {
	dialContextFunc := NewDialContextFunc(optFuncs...)
	return func(network string, addr string) (net.Conn, error) {
		return dialContextFunc(context.TODO(), network, addr)
	}
}

type clientConnTracker struct {
	net.Conn
	opts       *dialerOpts
	dialerName string
	event      trace.EventLog
	mu         sync.Mutex
}

func dialClientConnTracker(ctx context.Context, network string, addr string, dialerName string, opts *dialerOpts) (net.Conn, error) {
	var event trace.EventLog
	if opts.tracing {
		event = trace.NewEventLog(fmt.Sprintf("net.ClientConn.%s", dialerName), fmt.Sprintf("%v", addr))
	}
	if opts.monitoring {
		reportDialerConnAttempt(dialerName)
	}
	conn, err := opts.parentDialContextFunc(ctx, network, addr)
	if err != nil {
		if event != nil {
			event.Errorf("failed dialing: %v", err)
			event.Finish()
		}
		if opts.monitoring {
			reportDialerConnFailed(dialerName, err)
		}
		return nil, err
	}
	if event != nil {
		event.Printf("established: %s -> %s", conn.LocalAddr(), conn.RemoteAddr())
	}
	if opts.monitoring {
		reportDialerConnEstablished(dialerName)
	}
	tracker := &clientConnTracker{
		Conn:       conn,
		opts:       opts,
		dialerName: dialerName,
		event:      event,
	}
	return tracker, nil
}

func (ct *clientConnTracker) Close() error {
	err := ct.Conn.Close()
	ct.mu.Lock()
	if ct.event != nil {
		if err != nil {
			ct.event.Errorf("failed closing: %v", err)
		} else {
			ct.event.Printf("closing")
		}
		ct.event.Finish()
		ct.event = nil
	}
	ct.mu.Unlock()
	if ct.opts.monitoring {
		reportDialerConnClosed(ct.dialerName)
	}
	return err
}

43

vendor/github.com/mwitkow/go-conntrack/listener_reporter.go

generated

vendored

Normal file


@@ -0,0 +1,43 @@

// Copyright 2016 Michal Witkowski. All Rights Reserved.
// See LICENSE for licensing terms.

package conntrack

import prom "github.com/prometheus/client_golang/prometheus"

var (
	listenerAcceptedTotal = prom.NewCounterVec(
		prom.CounterOpts{
			Namespace: "net",
			Subsystem: "conntrack",
			Name:      "listener_conn_accepted_total",
			Help:      "Total number of connections opened to the listener of a given name.",